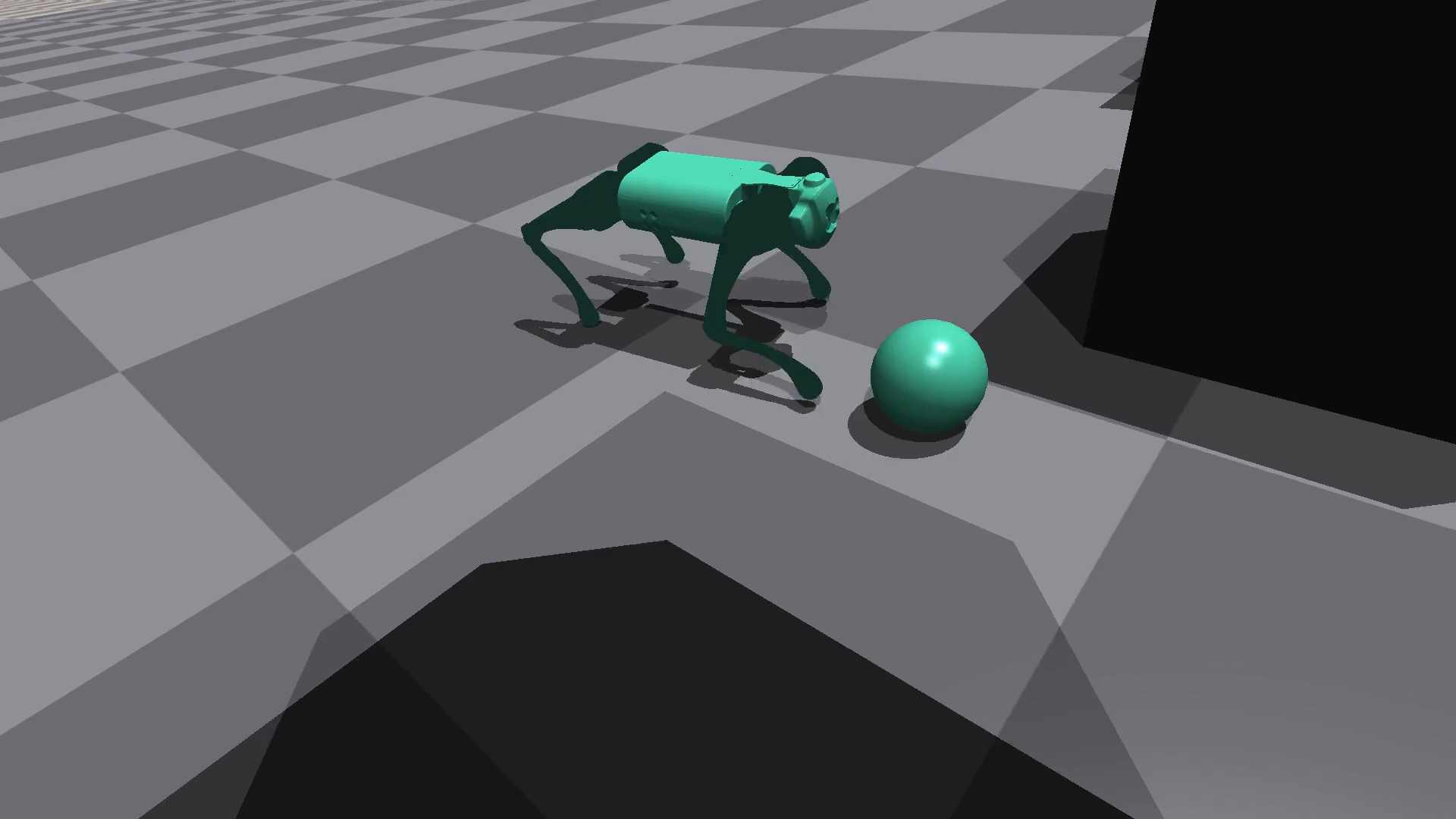

Learning-Based Quadruped Locomotion & Dynamic Manipulation

Researching learning-based control strategies for legged robots, with a focus on dynamic locomotion and task-driven behavior in unstructured environments. My work centers on developing reinforcement learning pipelines in simulation and translating them to hardware for robust real-world deployment.

I contribute to end-to-end system development spanning environment design, reward shaping, policy training, and evaluation. This includes integrating learned controllers with robot dynamics models, debugging instability modes, and analyzing sim-to-real gaps caused by latency, sensing noise, and contact uncertainty.

My impact

Designed and trained reinforcement learning policies for legged robotic tasks in Isaac Gym

Built custom simulation environments and reward structures to drive stable, task-oriented locomotion

Investigated sim-to-real transfer challenges including actuation delay, state estimation noise, and contact modeling errors

Contributed to hardware validation and performance analysis of learned controllers